AI Glitches are the static noise of LLM Culture

Large Language Models are showing persistent flaws that will shape our relationship to technology

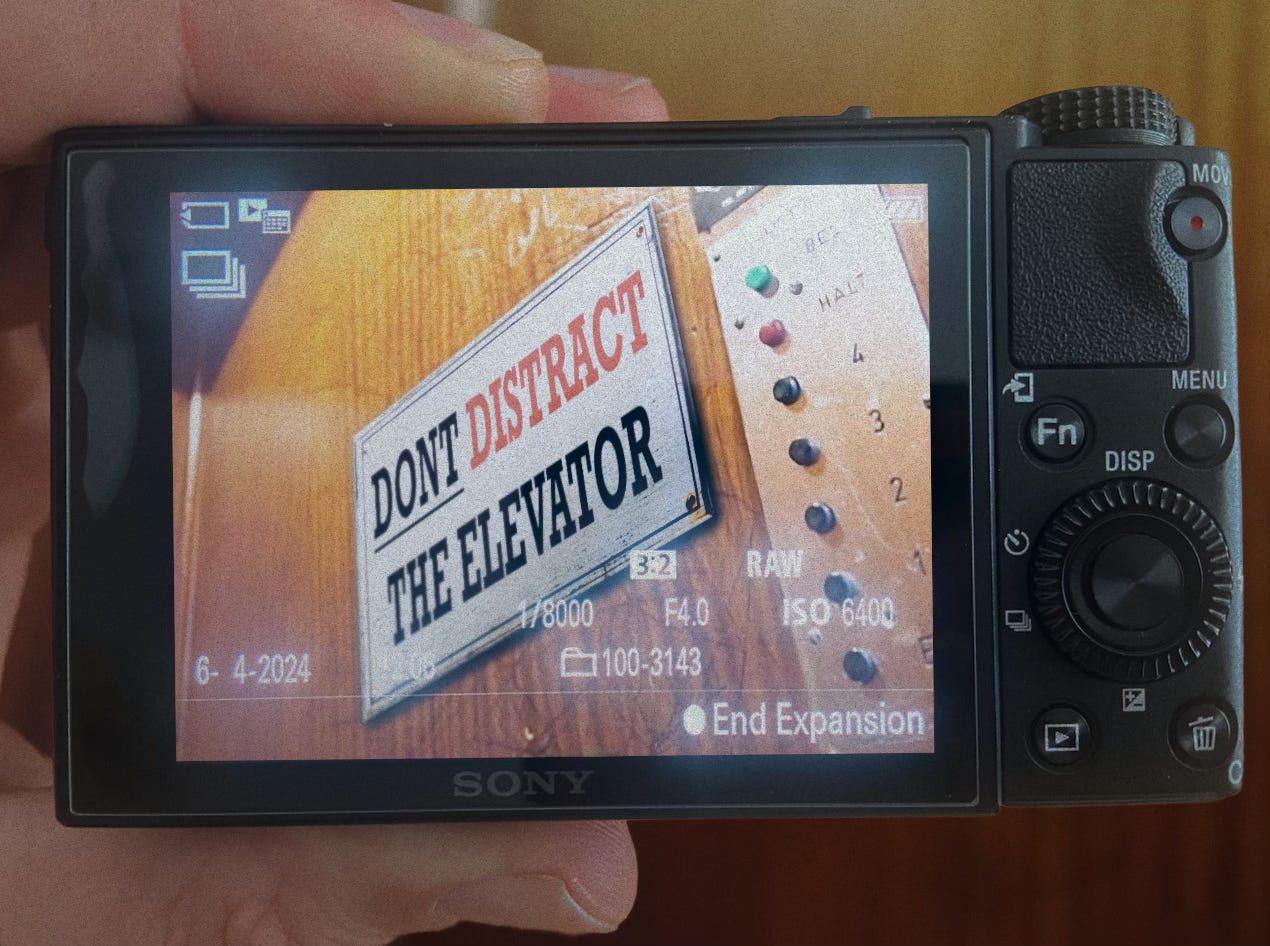

When interacting with devices, we apply our knowledge of causality to achieve what we desire. If a button is green, we know "green good, red bad", so pushing a green button on a door will probably bring us closer to where we want to be than pushing a red one.

In interaction with digital technology, causality is no longer a material necessity. Sculpting digital user workflows around common expectations of causality became a central trait of User Experience Design, one that requires true craftsmanship to achieve "seamless" interaction without irritating friction. Luckily, traditional data processing devices run on transparent algorithms that can be optimized and debugged precisely, so that the relationship of cause and effect feels intrinsically "right" based on common knowledge about the world. Most digital devices provided a perfect canvas for designing new interactions.

One might expect that sculpting comprehensible user workflows should become easier with AI-integrated devices, as their underlying models have been trained on incredibly large sets of knowledge about the world. But I think this will not be the case. Instead, the ongoing integration of AI into everyday devices[1] will make interaction with technology weirder than ever before.

Analysis of the recent previews of Sora, the impressive AI video generator, revealed many significant flaws in physical causality and continuity in the details, "hallucinations" already well known from text-generating AIs.[2] While the Sora developers rightly emphasize the early state of their research, critics question whether these flaws will ever disappear. A central concern with OpenAI's vision of building a universal AI-powered world simulator[3] is that Sora is already pulling all kinds of smart levers to push ever more training data into the model to make it understand the world, while very obvious and basic flaws persist. Current AIs are able to produce impressive results, but they are a brute-force approach to making sense of reality.

LLMs are not debuggable algorithms but black boxes that can only be retrained, and most applications are not built on newly developed models. I therefore expect that optimizing user workflows towards "seamless" behavior will be much more limited by financial constraints than it was in an AI-less architecture, where it only required rearranging some arrows in a flow diagram. So I assume the AI bugs we are seeing in today's text and video AIs will not disappear, but will be integrated into a new set of common knowledge. It will be interesting to see what kind of best practices an emerging LLM culture will develop to deal with daily AI glitches.

We used to hit the analog television when it did not show a proper image. How can we hit LLMs to make them behave the way we want them to?

[1] Microsoft has been the fastest to bring the latest OpenAI models to its products, Google is working on AI assistance for Google Docs, and Apple is working on a deal to eventually bring AI to iPhones.